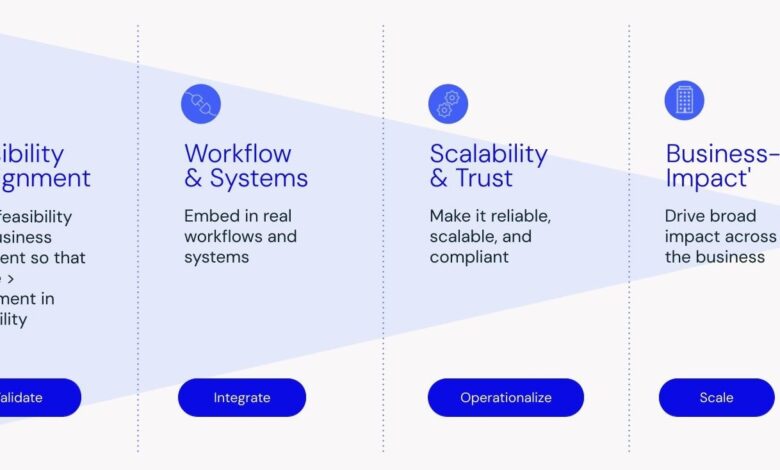

What scaling AI actually requires: 4 stages

Stage 1: Validate

Stage 2: Integrate (Connect to real workflows and systems)

Stage 3: Operationalize (Make it reliable, scalable, and compliant)

Stage 4: Scale (Drive broad impact across the business)

Scaling requires dozens of interdependent decisions across architecture, infrastructure, engineering workflows, organizational design, governance, compliance, team capability, and more.

This first stage, Validate, is often confused with technical prototyping. In reality, it’s broader. The goal isn’t just to prove that the model works, but to validate that scaling the solution would deliver enough business value to justify the investment of time and resources.

This means validating that:

Once you have validated that the AI initiative solves a real problem and is technically feasible, the model must move out of the lab and into the real world.

These are the core integration challenges:

These are the kinds of questions that often get ignored during the early POC phase. But in production, they define the actual user experience.

AI integration is a team sport. And the handoffs between teams, or lack thereof, can make or break progress. Sometimes the incentives just aren’t aligned across functions. Sometimes it’s not clear who’s accountable for what. Without cross-functional clarity and alignment, even the most promising model will stall.

This phase is where AI truly becomes part of a larger system. And if it’s not designed to plug into that system effectively, the pilot or POC won’t survive contact with reality.

If your model only works in isolation or relies on a bespoke data setup, it won’t survive contact with production reality.

Once an AI initiative is viable and ready to move toward production, the next big step is making the right foundational architecture choices. You want to make sure you are not building a monolith.

For example, if you’re building an AI-powered insights tool, you don’t hardwire the foundation model directly into your app logic. Instead, you break it down:

Why it matters for scaling

Modularity isn’t just an architectural principle. It allows you to build AI systems that evolve, adapt, and scale – without collapsing under their own weight.
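To make the idea concrete, here is a minimal sketch of what "not hardwiring the model into app logic" can look like. Every name in it (`TextModel`, `StubModelA`, `StubModelB`, `InsightsService`) is hypothetical and for illustration only; a real adapter would call an actual model provider where the stubs return canned strings.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface the app depends on, instead of a specific vendor SDK."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModelA:
    """Stand-in for one foundation model; a real adapter would call a provider here."""

    def complete(self, prompt: str) -> str:
        return f"[model-a] {prompt}"


@dataclass
class StubModelB:
    """Stand-in for a second, interchangeable model."""

    def complete(self, prompt: str) -> str:
        return f"[model-b] {prompt}"


@dataclass
class InsightsService:
    """App logic: builds the prompt and delegates generation to the injected model."""

    model: TextModel
    prompt_template: str = "Summarize the key insight in: {data}"

    def insight(self, data: str) -> str:
        prompt = self.prompt_template.format(data=data)
        return self.model.complete(prompt)


service = InsightsService(model=StubModelA())
first = service.insight("Q3 sales by region")

# Swapping the model is a one-line change; InsightsService itself is untouched.
service.model = StubModelB()
second = service.insight("Q3 sales by region")
```

Because the app only knows about the `complete` method, the model, the prompt template, and the surrounding logic can each change on their own schedule.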

As AI projects transition from proof-of-concept to production, it’s not enough for the model to be accurate or promising. It needs to be enterprise-ready: stable, secure, maintainable, and scalable. That means building robust infrastructure and practices around the model.

For real-time use cases like product recommendations or fraud detection, manual server scaling just isn’t viable. You can’t afford to hit usage caps or scramble to manage capacity. Scaling must be elastic, automatically growing and shrinking based on demand, without human intervention. Without elasticity, you either overpay for idle compute, or your system fails under pressure.
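As a rough illustration of elasticity, the sketch below computes how many replicas a service should run from its observed load. It assumes a simple proportional rule, similar in spirit to what common autoscalers apply; the function name, parameters, and default limits are all hypothetical, and in practice this logic usually lives in your platform's autoscaler rather than in your own code.

```python
import math


def desired_replicas(
    current: int,
    observed_load: float,
    target_load: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Scale the replica count in proportion to load, clamped to fixed bounds.

    observed_load and target_load are per-replica figures in the same unit
    (for example, requests per second per replica).
    """
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be > 0")
    raw = math.ceil(current * observed_load / target_load)
    # Clamp so a traffic spike cannot run up unbounded cost,
    # and a quiet period never scales the service to zero.
    return max(min_replicas, min(max_replicas, raw))


scale_up = desired_replicas(current=4, observed_load=90.0, target_load=60.0)
scale_down = desired_replicas(current=4, observed_load=15.0, target_load=60.0)
capped = desired_replicas(current=10, observed_load=600.0, target_load=60.0)
```

The upper bound is what keeps you from overpaying, and the proportional step is what keeps the system from failing under pressure.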

In modern software, CI/CD is a given. But AI systems need their own version of this. Updates to model versions, prompt templates, and retrieval logic must all happen with minimal to no downtime. The key is making sure updates are safe, fast, and repeatable.
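One way to picture a safe, repeatable update is to ship the model version, prompt template, and retrieval settings together as a single versioned release, and only switch traffic once the release passes a check. The sketch below is an assumption-laden illustration, not a prescribed pipeline: `Release`, `ReleaseRegistry`, and `smoke_check` are hypothetical names, and a real check would run an evaluation suite rather than two string and number tests.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Release:
    """Immutable bundle of everything that changes together in one deploy."""

    model_version: str
    prompt_template: str
    retriever_top_k: int


class ReleaseRegistry:
    """Holds the release currently serving traffic."""

    def __init__(self, initial: Release) -> None:
        self._active = initial

    @property
    def active(self) -> Release:
        return self._active

    def promote(self, candidate: Release, check: Callable[[Release], bool]) -> bool:
        """Switch to the candidate only if it passes the check."""
        if not check(candidate):
            return False
        # A single reference swap: callers see the old release or the new one,
        # never a half-updated mix of the two.
        self._active = candidate
        return True


def smoke_check(release: Release) -> bool:
    """Hypothetical gate; a real one would run evaluation cases."""
    return "{question}" in release.prompt_template and release.retriever_top_k > 0


registry = ReleaseRegistry(Release("v1", "Answer: {question}", 5))
promoted = registry.promote(Release("v2", "Answer briefly: {question}", 8), smoke_check)
rejected = registry.promote(Release("v3", "Answer:", 8), smoke_check)
```

Here the `v3` release is refused because its prompt template lost the `{question}` placeholder, so `v2` keeps serving.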

Data changes. Behavior changes. Regulations change. And when that happens, your model can start to drift. Enterprise AI systems need built-in drift detection and retraining pipelines. For example, you can automatically trigger retraining when accuracy drops, or use rollback mechanisms to revert if a new model performs poorly. This is especially critical for high-risk use cases: anything customer-facing, regulation-sensitive, or decision-critical.
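The retraining trigger and the rollback decision can be sketched in a few lines. This is a simplified illustration under stated assumptions: it presumes you receive ground-truth labels for recent predictions, and the class name, thresholds, and window size are hypothetical. Real drift detection often also watches input distributions, not just accuracy.

```python
from collections import deque
from typing import Deque


class DriftMonitor:
    """Tracks accuracy over the most recent labeled predictions."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05,
                 window: int = 100) -> None:
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes: Deque[bool] = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        self.outcomes.append(correct)

    def rolling_accuracy(self) -> float:
        if not self.outcomes:
            return self.baseline
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        """True once a full window falls below baseline minus tolerance."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.rolling_accuracy() < self.baseline - self.tolerance


def version_to_serve(current_accuracy: float, candidate_accuracy: float) -> str:
    """Rollback rule: keep the current model unless the candidate is at least as good."""
    return "candidate" if candidate_accuracy >= current_accuracy else "current"


monitor = DriftMonitor(baseline_accuracy=0.90, tolerance=0.05, window=10)
for correct in [True] * 8 + [False] * 2:  # 80% accuracy over the window
    monitor.record(correct)
retrain = monitor.needs_retraining()
```

Waiting for a full window before raising the flag avoids retraining on a handful of unlucky predictions.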

The data processing model should fit the use case:

But regardless of speed, data quality is non-negotiable.
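A simple way to enforce this is a quality gate that every record passes through before it reaches the model, whether the pipeline is batch or real-time. The sketch below is a minimal example; the schema (`customer_id`, `amount`, `timestamp`) and the rules are hypothetical and stand in for whatever your own data contracts require.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema, for illustration only.
REQUIRED_FIELDS = ("customer_id", "amount", "timestamp")


def validate_record(record: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the record is clean."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS
                if record.get(field) is None]
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        problems.append("negative amount")
    return problems


def split_batch(
    records: List[Dict[str, Any]],
) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Separate clean records from rejected ones so bad data never reaches the model."""
    clean: List[Dict[str, Any]] = []
    rejected: List[Dict[str, Any]] = []
    for record in records:
        (rejected if validate_record(record) else clean).append(record)
    return clean, rejected


batch = [
    {"customer_id": "c1", "amount": 42.0, "timestamp": "2024-01-01T00:00:00Z"},
    {"customer_id": "c2", "amount": -5.0, "timestamp": "2024-01-01T00:01:00Z"},
    {"customer_id": None, "amount": 10.0, "timestamp": "2024-01-01T00:02:00Z"},
]
clean, rejected = split_batch(batch)
```

Keeping the rejected records, rather than silently dropping them, gives the data team something to investigate.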

Key data infrastructure practices

AI at scale is an ops problem

Without the right foundation (elastic infrastructure, modular design, safe deployment, and solid data pipelines), even the best models won’t succeed at scale.

Successful scaling of AI depends as much on people, roles, and processes as it does on infrastructure. And in most companies, organizational readiness is the weakest link.

Even technically sound AI systems often struggle once they leave the lab. Why?

In other words, the model might work, but the system doesn’t.


Amir leads BOI’s global team of product strategists, designers, and engineers in designing and building AI technology that transforms roles, functions, and businesses. He loves to solve complex real-world challenges that have an immediate impact, and is especially focused on KPI-led software that drives growth and innovation across the top and bottom line. He can often be found (objectively) evaluating new technologies that could benefit our clients, and he has launched products with Anthropic, Apple, Netflix, Palantir, Google, Twitch, Bank of America, and others.