The reasons why your POC isn’t scaling (and how to fix it)

Most companies aren’t short on AI ideas; they’re short on scaling discipline. The future belongs to those who scale intentionally.

These are some of the red flags we see time and again in AI proofs of concept (POCs), each of which can signal that a project is headed for failure:

If your AI pilot was built to solve a very specific problem, it most likely relies on specific data formats, hardcoded variables, and manual workarounds. That might get you a quick demo, but it won’t survive a handoff to other teams, or the shift in inputs that comes once users start working with your tool.

Many AI pilots run on clean, curated datasets that are small enough to manage manually. At scale, things get messy, and data infrastructure becomes a product of its own. You’ll face challenges like unpredictable user input and the bandwidth demands of real-time data queries.

AI at scale is more than a one-time deployment; it requires deliberate orchestration, with versioned models, automated testing, and repeatable release processes.

You don’t want your business-critical model to break because someone manually updated it on a Thursday and forgot to test edge cases.
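
One way to keep an untested Thursday update out of production is an automated promotion gate. The sketch below is illustrative only: `promote_if_safe`, `EdgeCase`, and the 1% regression tolerance are hypothetical names and values, and a "model" is simplified to any function from text to a label. A candidate is promoted only if it passes every edge-case test and does not lose more than the allowed margin of holdout accuracy against the model currently in production.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical interface: a model is any callable mapping input text to a label.
Model = Callable[[str], str]


@dataclass
class EdgeCase:
    """A hypothetical edge-case test: an input and the label the model must return."""
    text: str
    expected: str


def accuracy(model: Model, data: Sequence[tuple[str, str]]) -> float:
    """Fraction of (input, label) pairs the model predicts correctly."""
    if not data:
        raise ValueError("holdout set must not be empty")
    correct = sum(1 for x, y in data if model(x) == y)
    return correct / len(data)


def promote_if_safe(
    candidate: Model,
    current: Model,
    edge_cases: Sequence[EdgeCase],
    holdout: Sequence[tuple[str, str]],
    max_regression: float = 0.01,  # assumed tolerance, tune per use case
) -> tuple[bool, str]:
    """Return (promote?, reason).

    Promotion is blocked if the candidate fails any edge case, or if its
    holdout accuracy falls more than max_regression below the current model.
    """
    failures = [c.text for c in edge_cases if candidate(c.text) != c.expected]
    if failures:
        return False, f"failed {len(failures)} edge case(s): {failures}"
    cand_acc = accuracy(candidate, holdout)
    curr_acc = accuracy(current, holdout)
    if cand_acc < curr_acc - max_regression:
        return False, f"accuracy regressed: {cand_acc:.3f} < {curr_acc:.3f}"
    return True, f"ok: accuracy {cand_acc:.3f} vs {curr_acc:.3f}"
```

In a real pipeline this check would run automatically in CI/CD on every model update, so that no one can skip the edge-case suite by deploying by hand.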

Once a model goes live, it will face conditions it was never trained on. Drift is inevitable at this stage. What matters is how fast and how safely you can respond, which depends on your solution’s drift-detection capabilities. Without real-time monitoring, alerting, and retraining triggers, teams often discover issues only after they’ve caused negative business impact.
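
To make drift detection concrete, here is a minimal sketch using the Population Stability Index (PSI), one common way to compare a feature’s live distribution against its training-time baseline. The technique choice is ours, not a prescribed method: the quantile binning, the 10-bin default, the small epsilon that avoids `log(0)`, and the 0.2 alert threshold are widely used rules of thumb. `check_drift` is a hypothetical helper you would connect to your own alerting and retraining triggers.

```python
import bisect
import math
from typing import Sequence


def _bin_proportions(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Share of values in each bin; bins are delimited by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        # bisect_right returns how many edges are <= v, i.e. the bin index.
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(baseline: Sequence[float], live: Sequence[float],
        n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e),
    where e and a are baseline and live bin proportions."""
    if not baseline or not live:
        raise ValueError("baseline and live samples must be non-empty")
    ordered = sorted(baseline)
    # Interior bin edges at the baseline's quantiles.
    edges = [ordered[int(len(ordered) * i / n_bins)] for i in range(1, n_bins)]
    expected = _bin_proportions(baseline, edges)
    actual = _bin_proportions(live, edges)
    # eps keeps the log finite when a bin is empty in one of the samples.
    return sum((a - e) * math.log((a + eps) / (e + eps))
               for e, a in zip(expected, actual))


def check_drift(baseline: Sequence[float], live: Sequence[float],
                threshold: float = 0.2) -> tuple[float, bool]:
    """Return (psi_score, drifted?) using an assumed alert threshold."""
    score = psi(baseline, live)
    return score, score > threshold
```

In practice you would run a check like this per feature on a schedule (or over a sliding window of live traffic), and raise an alert or queue a retraining job whenever `drifted` is true.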

A technically brilliant model can still fail if people don’t trust it. Accuracy scores alone don’t earn that trust; transparency, accountability, and control do. Without built-in explainability, bias monitoring, and access controls, your model might be right but unusable.

Scaling AI means ensuring the solution can withstand regulatory scrutiny under frameworks such as GDPR, HIPAA, or other regional standards. Every scaled AI system is also a new attack surface. If your model is exposed via an API, accepts user inputs, or interacts with sensitive data, it needs to be protected. At scale, your processes should include strict safeguards against common attacks and security weaknesses.

AI at scale doesn’t run on autopilot. It requires a supporting cast of specialized roles to maintain, govern, and improve systems over time. These include:

If these roles don’t exist (or if responsibilities are unclear), AI solutions become orphaned after deployment. No one owns them → no one updates them → no one trusts them.

AI lives at the intersection of strategy, product, engineering, and compliance. That makes collaboration across teams essential. The most scalable AI solutions aren’t built in silos. They emerge from teams where:

When alignment is missing, you get AI tools no one wants, no one understands, or no one can safely use.

The most common adoption failures aren’t technical but behavioral:

To overcome these, you need more than onboarding. You need organizational fluency: shared language, clear expectations, and a culture that sees AI as a tool to be used and improved, not feared or misunderstood.

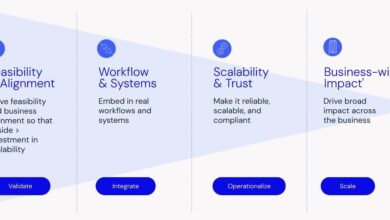

Let’s recap what it really takes to scale:

Scaling AI isn’t a finish line you reach at the end of the development stage. It’s a capability. And like any core capability, it delivers only when it’s structured, resourced, and intentionally embedded into how the business operates, guided by a clear AI strategy.

Looking to build an AI solution that delivers real business value at scale? Let’s talk.

Amir leads BOI’s global team of product strategists, designers, and engineers in designing and building AI technology that transforms roles, functions, and businesses. Amir loves to solve complex real-world challenges that have an immediate impact, and is especially focused on KPI-led software that drives growth and innovation across the top and bottom lines. He can often be found (objectively) evaluating and assessing new technologies that could benefit our clients and has launched products with Anthropic, Apple, Netflix, Palantir, Google, Twitch, Bank of America, and others.